Its creators pose a challenge to machine vision researchers: use the data to make assistive technology better.

by Emerging Technology from the arXiv February 27, 2018

One of the hardest tasks for computers is “visual question answering”—that is, answering a question about an image. And this is no theoretical brain-teaser: such skills could be crucial to technology that helps blind people with daily life.

Blind people can use apps to take a photo, record a question like “What color is this shirt?” or “When does this milk expire?”, and then ask volunteers to provide answers. But the images are often poorly framed, badly focused, or missing the information required to answer the question. After all, the photographers can’t see.

Computer vision systems could help, for example, by filtering out unsuitable images and suggesting that the photographer try again. But machines cannot do this yet, in part because there is no large data set of real-world images that can be used to train them.

Enter Danna Gurari at the University of Texas at Austin and a few colleagues, who today publish a database of 31,000 images along with questions and answers about them. At the same time, Gurari and co set the machine-vision community a challenge: to use their data set to train machines as effective assistants for this kind of real-world problem.

The data set comes from an existing app called VizWiz, developed by Jeff Bigham and colleagues at Carnegie Mellon University in Pittsburgh to assist blind people. Bigham is also a member of this research team.


Using the app, a blind person can take a photograph, record a question verbally, and then send both to a team of volunteer helpers who answer to the best of their ability.

But the app has a number of shortcomings. Volunteers are not always available, for example, and the images do not always make an answer possible.

In their effort to find a better way, Gurari and co started by analyzing over 70,000 photos gathered by VizWiz from users who had agreed to share them. The team removed all photos that contained personal details such as credit card info, addresses, or nudity. That left some 31,000 images and the recordings associated with them.

The team then presented the images and questions to workers from Amazon’s Mechanical Turk crowdsourcing service, asking each worker to provide an answer consisting of a short sentence. The team gathered 10 answers for each image to check for consistency.
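The article does not say how the team measured consistency across the 10 answers. One simple approach is a majority vote: normalize each answer, count the most common one, and report what fraction of workers agreed with it. The sketch below assumes exactly that. The function name `summarize_answers` and its normalization rules (trim whitespace, lowercase, drop a trailing period) are hypothetical illustrations, not the VizWiz team's method.

```python
from collections import Counter


def summarize_answers(answers: list[str]) -> tuple[str, float]:
    """Hypothetical consistency check: return the most common answer and
    the fraction of workers who gave it (a simple majority vote)."""
    if not answers:
        raise ValueError("need at least one answer")
    # Normalize so trivial differences ("Blue." vs "blue") count as agreement.
    normalized = [a.strip().lower().rstrip(".") for a in answers]
    # most_common(1) returns [(answer, count)] for the top answer.
    answer, count = Counter(normalized).most_common(1)[0]
    return answer, count / len(normalized)
```

For example, with 10 made-up answers to "What color is this shirt?" (seven "Blue.", two "navy blue", one "unanswerable"), the function returns `("blue", 0.7)`: 70 percent of workers agree. A low agreement fraction would flag questions where the image or the question itself is ambiguous.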

These 31,000 images, questions, and answers make up the new VizWiz database, which Gurari and co are making publicly available.

The team has also carried out a preliminary analysis of the data, which provides unique insights into the challenges that machine vision faces in providing this kind of help.

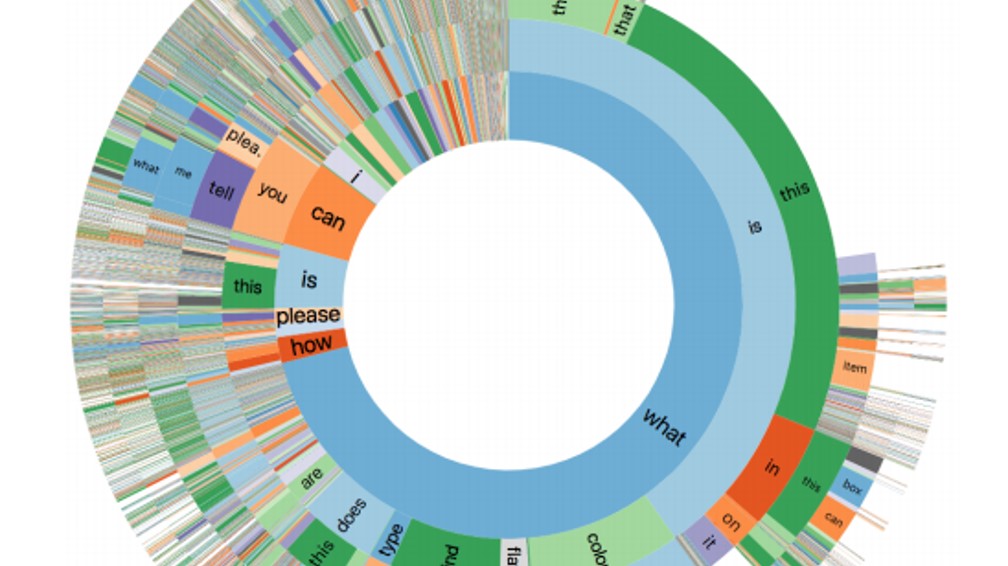

The questions are sometimes simple, but by no means always. Many can be summarized as “What is this?”, but only 2 percent call for a yes-or-no answer, and fewer than 2 percent can be answered with a number.

And there are other unexpected features. It turns out that while most questions begin with the word “what,” almost a quarter begin with an unusual first word. This is almost certainly because the recording process clipped the beginning of the question.

But answers are often still possible. Take questions like “Sell by or use by date of this carton of milk” or “Oven set to thanks?” Both are straightforward to answer if the image provides the right information.

The team also analyzed the images. More than a quarter are unsuitable for eliciting an answer, because they are not clear or do not contain the relevant info. Being able to spot these quickly and accurately would be a good start for a machine vision algorithm.

And therein lies the challenge for the machine vision community. “We introduce this dataset to encourage a larger community to develop more generalized algorithms that can assist blind people,” say Gurari and co. “Improving algorithms on VizWiz can simultaneously educate more people about the technological needs of blind people while providing an exciting new opportunity for researchers to develop assistive technologies that eliminate accessibility barriers for blind people.”

Surely a worthy goal.

Ref: arxiv.org/abs/1802.08218 : VizWiz Grand Challenge: Answering Visual Questions from Blind People